Key Concepts and Detailed Discussion:

1. Introduction to Forecasting:

Forecasting is a critical function in management: it predicts future events so that planning and decision-making rest on explicit expectations rather than guesswork. It helps managers anticipate changes in demand, set budgets, schedule production, and manage resources efficiently. Accurate forecasts reduce uncertainty and improve operational efficiency.

2. Types of Forecasts:

Forecasts can be classified based on the time horizon they cover:

- Short-term Forecasts: Cover periods up to one year and are mainly used for operational decisions like inventory management and workforce scheduling.

- Medium-term Forecasts: Span one to three years and assist in planning activities such as budgeting and production planning.

- Long-term Forecasts: Extend beyond three years and are used for strategic decisions, such as capacity planning and market entry strategies.

3. Forecasting Models:

Forecasting models are generally categorized into three types:

- Time-Series Models: These models predict future values based on past data patterns, assuming that historical trends will continue. Examples include moving averages, exponential smoothing, and ARIMA (Auto-Regressive Integrated Moving Average).

- Causal Models: These models assume that the forecasted variable is affected by one or more external factors. Regression analysis is a commonly used causal model.

- Qualitative Models: Rely on expert opinions, intuition, and market research rather than quantitative data. Common qualitative methods include the Delphi method and market surveys.

4. Time-Series Forecasting Models:

Time-series models analyze past data to forecast future outcomes. The main components in time-series analysis are:

- Trend: The long-term movement in the data.

- Seasonality: Regular patterns that repeat over a specific period, such as monthly or quarterly.

- Cyclicality: Long-term fluctuations that are not of a fixed period, often associated with economic cycles.

- Random Variations: Unpredictable movements that do not follow any specific pattern.

5. Moving Averages and Weighted Moving Averages:

The moving average method smooths out short-term fluctuations and highlights longer-term trends or cycles. It is calculated as:

$$

\text{Moving Average} = \frac{\sum \text{(Previous n Periods Data)}}{n}

$$

A weighted moving average assigns different weights to past data points, typically giving more importance to more recent data. The formula for a weighted moving average is:

$$

\text{Weighted Moving Average} = \frac{\sum (W_i \cdot X_i)}{\sum W_i}

$$

where $W_i$ is the weight assigned to each observation $X_i$.
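The two formulas above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names, the `demand` figures, and the convention that the last weight applies to the most recent observation are all assumptions made for the example.

```python
def moving_average(data, n):
    """Forecast the next period as the mean of the last n observations."""
    if n <= 0 or n > len(data):
        raise ValueError("n must be between 1 and len(data)")
    return sum(data[-n:]) / n


def weighted_moving_average(data, weights):
    """Forecast the next period from the last len(weights) observations.

    Assumed convention: weights[0] applies to the oldest observation in
    the window and weights[-1] to the most recent one.
    """
    n = len(weights)
    if n == 0 or n > len(data):
        raise ValueError("need between 1 and len(data) weights")
    window = data[-n:]
    return sum(w * x for w, x in zip(weights, window)) / sum(weights)


demand = [10, 12, 13, 16, 19, 23]  # hypothetical demand figures

print(moving_average(demand, 3))                   # mean of 16, 19, 23
print(weighted_moving_average(demand, [1, 2, 3]))  # (1*16 + 2*19 + 3*23) / 6
```

Because the weights are divided by their sum, they do not need to add up to 1.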

6. Exponential Smoothing:

Exponential smoothing is a widely used time-series forecasting method that applies exponentially decreasing weights to past observations. The formula for simple exponential smoothing is:

$$

F_{t+1} = \alpha X_t + (1 - \alpha) F_t

$$

where:

- $F_{t+1}$ is the forecast for the next period.

- $\alpha$ is the smoothing constant ($0 < \alpha < 1$).

- $X_t$ is the actual value in the current period.

- $F_t$ is the forecast for the current period.
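A short Python sketch of this recursion follows. The formula needs a starting forecast, which the text does not specify; the sketch assumes the first forecast equals the first actual value unless one is supplied. The function name and the sample figures are hypothetical.

```python
def exponential_smoothing(actuals, alpha, initial_forecast=None):
    """Apply F_{t+1} = alpha * X_t + (1 - alpha) * F_t over a series.

    Returns a list with one forecast per period plus the forecast for
    the period after the last actual value.
    """
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie strictly between 0 and 1")
    if not actuals:
        raise ValueError("actuals must not be empty")
    # Assumption: seed the first forecast with the first actual value.
    forecast = actuals[0] if initial_forecast is None else initial_forecast
    forecasts = [forecast]
    for x in actuals:
        forecast = alpha * x + (1 - alpha) * forecast
        forecasts.append(forecast)
    return forecasts


sales = [100, 110, 105]  # hypothetical actual values X_1..X_3
forecasts = exponential_smoothing(sales, alpha=0.5)
print(forecasts)  # forecasts for periods 1 through 4
```

A larger smoothing constant makes the forecast react faster to recent changes, while a smaller one produces a smoother, slower-moving forecast.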

7. Trend Projection Models:

Trend projection fits a trend line to a series of historical data points and extends this line into the future. A simple linear trend line can be represented by a linear regression equation:

$$

Y = a + bX

$$

where:

- $Y$ is the forecasted value.

- $a$ is the intercept.

- $b$ is the slope of the trend line.

- $X$ is the time period.
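As an illustration, the intercept and slope can be estimated by ordinary least squares, which is the usual way to fit such a line. The sketch below assumes the time periods are numbered 1, 2, ..., n; the function name and data are hypothetical.

```python
def fit_linear_trend(values):
    """Least-squares fit of Y = a + bX, with X = 1, 2, ..., n."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    xs = range(1, n + 1)
    x_mean = sum(xs) / n
    y_mean = sum(values) / n
    # Slope: covariance of X and Y divided by the variance of X.
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, values))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    b = sxy / sxx
    a = y_mean - b * x_mean
    return a, b


history = [12, 14, 16, 18, 20]  # hypothetical values for periods 1..5
a, b = fit_linear_trend(history)
next_period = len(history) + 1
print(a, b, a + b * next_period)  # intercept, slope, forecast for period 6
```

Extending the line far beyond the observed periods assumes the same linear trend keeps holding, which becomes less plausible the further out the projection goes.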

8. Seasonal Variations and Decomposition of Time Series:

Decomposition is a technique that breaks down a time series into its underlying components: trend, seasonal, cyclic, and irregular. This method is particularly useful for identifying and adjusting for seasonality in forecasting.

- Additive Model: Assumes that the components add together to form the time series.

$$

Y_t = T_t + S_t + C_t + I_t

$$

- Multiplicative Model: Assumes that the components multiply to form the time series.

$$

Y_t = T_t \times S_t \times C_t \times I_t

$$

where:

- $Y_t$ is the actual value at time $t$.

- $T_t$ is the trend component at time $t$.

- $S_t$ is the seasonal component at time $t$.

- $C_t$ is the cyclic component at time $t$.

- $I_t$ is the irregular component at time $t$.
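To make the seasonal component concrete, the sketch below estimates multiplicative seasonal indices with a deliberately simplified approach: each season's average divided by the overall average. It assumes the series has no trend or cycle, so it is not a full decomposition, only an illustration of how $S_t$ can be estimated and removed. The function names and quarterly figures are hypothetical.

```python
def seasonal_indices(values, period):
    """Seasonal index = average for that season / overall average.

    Simplifying assumption: the series has no trend or cycle, so
    the overall average stands in for the trend component.
    """
    if period <= 0 or len(values) % period != 0:
        raise ValueError("series must cover a whole number of seasonal cycles")
    overall = sum(values) / len(values)
    indices = []
    for s in range(period):
        season_values = values[s::period]  # every observation of season s
        indices.append(sum(season_values) / len(season_values) / overall)
    return indices


def deseasonalize(values, indices):
    """Divide each observation by its seasonal index (multiplicative model)."""
    period = len(indices)
    return [y / indices[t % period] for t, y in enumerate(values)]


quarterly = [80, 100, 120, 100, 80, 100, 120, 100]  # two hypothetical years
indices = seasonal_indices(quarterly, period=4)
adjusted = deseasonalize(quarterly, indices)
print(indices)
print(adjusted)
```

An index above 1 marks a season that runs above average and an index below 1 marks one that runs below it. When the data do have a trend, the seasonal ratios are usually computed against a centered moving average instead of the overall average.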

9. Measuring Forecast Accuracy:

The accuracy of a forecast is crucial for effective decision-making. Common metrics used to evaluate forecast accuracy include:

- Mean Absolute Deviation (MAD):

$$

\text{MAD} = \frac{\sum |X_t - F_t|}{n}

$$

- Mean Squared Error (MSE):

$$

\text{MSE} = \frac{\sum (X_t - F_t)^2}{n}

$$

- Mean Absolute Percentage Error (MAPE):

$$

\text{MAPE} = \frac{100}{n} \sum \left| \frac{X_t - F_t}{X_t} \right|

$$

where:

- $X_t$ is the actual value at time $t$.

- $F_t$ is the forecasted value at time $t$.

- $n$ is the number of observations.
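The three measures translate directly into code. The following is a minimal sketch with hypothetical function names and data; note that MAPE is undefined whenever an actual value is zero, so the sketch rejects that case.

```python
def _check(actuals, forecasts):
    if len(actuals) != len(forecasts) or not actuals:
        raise ValueError("need two non-empty series of equal length")


def mad(actuals, forecasts):
    """Mean absolute deviation: average of |X_t - F_t|."""
    _check(actuals, forecasts)
    return sum(abs(x - f) for x, f in zip(actuals, forecasts)) / len(actuals)


def mse(actuals, forecasts):
    """Mean squared error: average of (X_t - F_t)^2."""
    _check(actuals, forecasts)
    return sum((x - f) ** 2 for x, f in zip(actuals, forecasts)) / len(actuals)


def mape(actuals, forecasts):
    """Mean absolute percentage error, expressed in percent."""
    _check(actuals, forecasts)
    if any(x == 0 for x in actuals):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((x - f) / x) for x, f in zip(actuals, forecasts))
    return 100 / len(actuals) * total


actual = [100, 200, 400]    # hypothetical actual values
forecast = [110, 190, 380]  # hypothetical forecasts for the same periods
print(mad(actual, forecast), mse(actual, forecast), mape(actual, forecast))
```

MAD is in the same units as the data, MSE penalizes large errors more heavily because the errors are squared, and MAPE is scale-free, which makes it convenient for comparing series of different magnitudes.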

10. Adaptive and Exponential Smoothing with Trend Adjustment:

More advanced smoothing techniques, such as exponential smoothing with trend adjustment, use two smoothing constants: one for the level of the series and one for its trend. The forecast for the next period is the sum of the two smoothed components:

$$

F_{t+1} = S_t + T_t

$$

where:

- $S_t$ is the smoothed value of the series.

- $T_t$ is the smoothed value of the trend.
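The text gives only the final forecast equation, so the sketch below fills in the updates using one common formulation, Holt's linear method: $S_t = \alpha X_t + (1 - \alpha)(S_{t-1} + T_{t-1})$ and $T_t = \beta (S_t - S_{t-1}) + (1 - \beta) T_{t-1}$. The symbol $\beta$ for the second smoothing constant, the initialization rule, the function name, and the data are all assumptions of this example; other texts use slightly different update equations.

```python
def holt_forecast(actuals, alpha, beta):
    """One-step-ahead forecast F_{t+1} = S_t + T_t (Holt's linear method).

    Assumed initialization: level = first observation,
    trend = second observation minus the first.
    """
    if len(actuals) < 2:
        raise ValueError("need at least two observations")
    if not (0 < alpha < 1 and 0 < beta < 1):
        raise ValueError("alpha and beta must lie strictly between 0 and 1")
    level = actuals[0]
    trend = actuals[1] - actuals[0]
    for x in actuals[1:]:
        previous_level = level
        # Smooth the level toward the new observation.
        level = alpha * x + (1 - alpha) * (previous_level + trend)
        # Smooth the trend toward the latest change in level.
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return level + trend


series = [10, 12, 14, 16]  # hypothetical series rising by 2 each period
print(holt_forecast(series, alpha=0.5, beta=0.5))
```

On a perfectly linear series like this one, the method simply continues the line; on noisier data, the two constants control how quickly the level and the trend estimates adapt.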

11. Application of Forecasting Models:

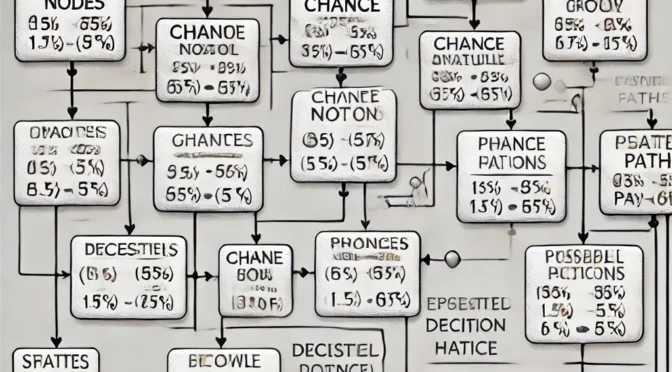

Selecting the right forecasting model depends on the characteristics of the data and on the decision the forecast is meant to support. For instance, time-series models are often suitable for short-term forecasts, while causal models tend to be better suited to long-term strategic planning.

12. Limitations of Forecasting:

Forecasting is not an exact science and has limitations. It relies heavily on historical data, which may not always be a reliable predictor of future events. Unforeseen events, changes in market conditions, or other unpredictable factors can lead to forecast inaccuracies.

By understanding these concepts and selecting the appropriate models, managers can make informed decisions that align with their strategic goals and operational needs. Forecasting remains a fundamental tool in the arsenal of effective management.